Not sure if this has been brought up before, or if there are already posts about it, but here it is.

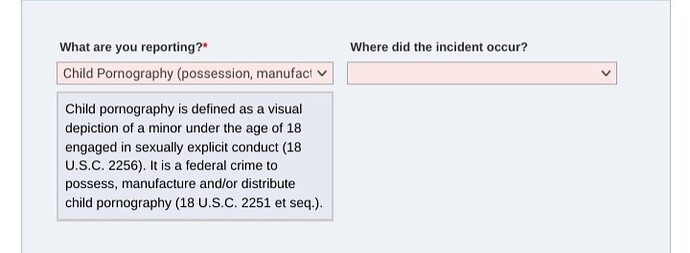

I found it on Google while researching whether, like INHOPE, the US-based nonprofit NCMEC treats anime/manga as criminal CSAM if reported as such.

https://report.cybertip.org/ispws/documentation/#common-types

This is the technical documentation for how the NCMEC CyberTipline functions, what info it includes, how it tags that info, etc.

C.1. File Annotations

Tags to describe a file.

`<animeDrawingVirtualHentai>`

0|1

The file is depicting anime, drawing, cartoon, virtual or hentai.
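To make the tag concrete, here is a rough sketch of how a consumer of a report might read that flag. Only the element name `animeDrawingVirtualHentai` and its `0|1` value come from the documentation quoted above; the `fileAnnotations` wrapper, the sample fragment, and the helper name `is_flagged_as_drawing` are my own assumptions for illustration, not NCMEC's actual schema or code.

```python
import xml.etree.ElementTree as ET

# Hypothetical fragment. Only <animeDrawingVirtualHentai> and its 0|1 value
# are taken from the quoted documentation; the wrapper element is assumed.
SAMPLE = """
<fileAnnotations>
  <animeDrawingVirtualHentai>1</animeDrawingVirtualHentai>
</fileAnnotations>
"""


def is_flagged_as_drawing(xml_text: str) -> bool:
    """Return True if the fragment carries the tag with a value of 1."""
    root = ET.fromstring(xml_text.strip())
    # Search the whole fragment, since the real nesting is not known here.
    node = root.find(".//animeDrawingVirtualHentai")
    return node is not None and (node.text or "").strip() == "1"


print(is_flagged_as_drawing(SAMPLE))  # prints True
```

All this shows is that the flag is a simple yes/no marker attached to a file. It says nothing about what happens to a report once the flag is set.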

Obviously, this is an issue of concern if you live in the US because it means that their tagging system is equipped to distinguish fictional/artistic works from actual CSA materials.

For what purpose, though, is unclear. NCMEC, in both their CyberTip reporting system and relevant media/blog posts, claims their focus is limited to what can legally be identified as CSA materials (18 USC 2256 and 18 USC 2251 et seq.).

Federal law specifically excludes artistic depictions made without the use of minors from this definition, such as drawings, sculptures, paintings, characters played by youthful adults, etc.

So tell me, are they treating drawings of fictional characters, which are made without the involvement of minors and clearly excluded from that legal definition, as CSA materials in the US?

Or is this to aid other countries where such materials are illegal?