Eight states are weighing anti-pornography bills that would force phone and tablet manufacturers like Apple and Samsung to automatically enable filters that censor nude and sexually explicit content.

The only way to disable the filters, according to the bills introduced this year, would be through passcodes. Providing such a passcode to a child would be forbidden, except when done by a parent.

Specifically, the bills say, the phone filters must prevent children from downloading sexually explicit content via mobile data networks, applications owned and controlled by the manufacturer, and wired or wireless internet networks.

Many device manufacturers already offer adult content filters, though they are not usually turned on by default. Many phone makers, for instance, allow parents to easily enable filters on web browsers that prevent children from navigating to websites known to host pornography.

In recent years, some phone makers have added sophisticated filters that use artificial intelligence to censor individual images on certain applications.

One of these anti-pornography bills was passed into law in Utah in 2021 but cannot take effect unless five additional states pass similar laws, a provision included to keep Big Tech companies from isolating the state after it passed the law. This year, lawmakers in Florida, South Carolina, Maryland, Tennessee, Iowa, Idaho, Texas and Montana are all considering versions of the bill, with Montana’s and Idaho’s versions furthest along in the process.

In interviews with NBC News, the authors of the original blueprint legislation — representatives from the National Center on Sexual Exploitation and Protect Young Eyes, both advocacy organizations focused on child safety — said that the original intention of the model bill was to compel device manufacturers to automatically turn on adult filters for web browsers and not other applications. Those filters were already on phones, but not on by default, in 2019 when the draft legislation was first created.

But Chris McKenna, founder and CEO of Protect Young Eyes, acknowledged that the legislation could also end up applying to other device-level filters created in recent years that some might consider more invasive.

In 2021, Apple introduced a device filter that can scan messages for nudity and blur any suspected nude images for people who have turned it on. The filter, which an adult administrator can enable for children, also offers to connect users to parents or help resources.

Most of the state bills under consideration would make device manufacturers liable for criminal and civil penalties if they don’t have filters automatically enabled that meet “industry standards.” The bills do not define what that standard is or if messaging filters are included.

McKenna said that “the intention is meant to point toward the browsers and the [search] engines that have the filters already in place.” But, he noted, “you wouldn’t find me upset if they chose to turn that on for iMessage.”

Benjamin Bull, the general counsel for the National Center on Sexual Exploitation, said that when he crafted the language of the original model bill, it was designed to narrowly address the issue of child access to internet pornography in a way that avoided potential court challenges.

After it was drafted, Bull said, NCOSE provided it to various interested parties across the country, and it eventually found a home in Utah.

“We gave it to some constituents in Utah who took it to their legislators, and legislators liked it,” Bull said.

Since the bill’s passage in Utah, Bull and McKenna said, various interested parties have reached out to them in an effort to bring the bill to their own states.

“I mean, almost on a daily basis, from constituents, from legislators. ‘What can we do? We’re desperate. Do you have a model bill? Can you help us?’ And we said, ‘as a matter of fact, we do,’” Bull said.

Erin Walker, public policy director of Montana child safety organization Project STAND, said that she learned about the bill through a presentation McKenna gave to a child safety coalition. She said she reached out to McKenna, who helped her get it introduced to Montana lawmakers.

The bill, she noted, was part of a series of legislation in Montana aimed at pornography.

“In 2017, we passed HB 247, which established that showing sexually explicit material to a child constitutes sexual abuse. And then in 2019, we passed a resolution declaring pornography to be a public health hazard in the state of Montana,” she said.

The bill is also part of a wave of legislation across the country aimed at regulating Big Tech.

“I think it’s just that Big Tech doesn’t want to be regulated,” Walker said. “We have to convince legislators that there is an appropriate amount of regulation in every industry.”

Proponents say the bills would require only an incremental step from tech companies, arguing that, because of how most of the bills are written, no new filters would be needed and other onerous procedures such as age verification would not be necessary.

But differences in language from state to state have raised questions among watchdogs about what hoops manufacturers might have to jump through to meet each state’s requirements.

The language of Montana’s bill, for instance, appears to suggest that manufacturers would need to verify users’ ages to avoid potential lawsuits or prosecution. According to the draft of the bill, a manufacturer is liable if “the manufacturer knowingly or in reckless disregard provides the passcode to a minor.”

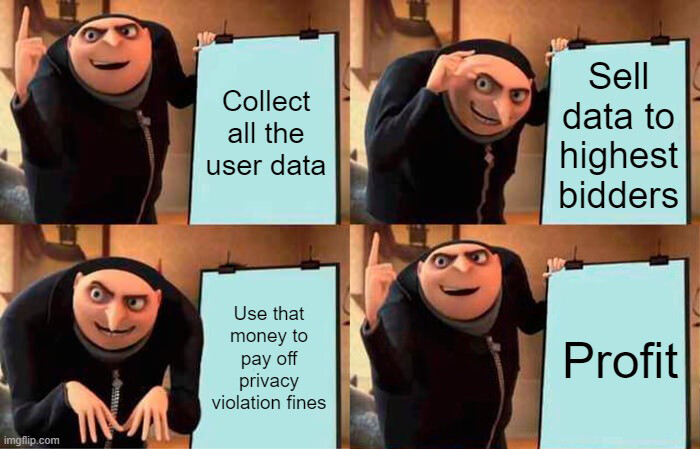

Samir Jain, VP of policy at the Center for Democracy and Technology, said the inclusion of such language poses concerns around user privacy and data protection. In theory, manufacturers could be forced to collect age data from customers, via government ID and other forms of identification.

“There are no restrictions as such on how providers can then use this data for other purposes. So even the sort of age verification aspect of this, I think, both creates burdens and gives rise to privacy concerns,” Jain said.

Jain also noted that the filter bills create concerns around free expression.

“I think we have to recognize that filters like these certainly with current technology are far from perfect. They can’t distinguish, you know, for example, nudity that’s prurient or of a sexual nature versus nudity that’s for artistic or other purposes, which the bills at least purport to exempt from regulation,” Jain said. “So any requirements put in filters, necessarily will mean that those filters will catch lots of images and other material that even the authors of the bill would say shouldn’t be restricted.”

Jain said he believes that, given the nuances of what viewing material is acceptable for children of different ages, filters should be deliberately tuned and applied by parents themselves.

“What’s appropriate or useful for a teenager versus a 6 year-old are quite different,” he said. “That’s why I think the provision of different kinds of tools and capabilities that can then be tailored, depending on the circumstances makes a lot more sense than sort of a crude mandatory filtering.”

I see NCOSE have their finger in yet another pie…