And we just had a thread about NCOSE recently:

I don’t have anything in particular to add, so, um…

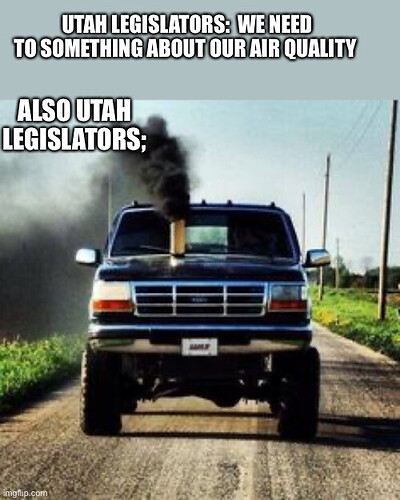

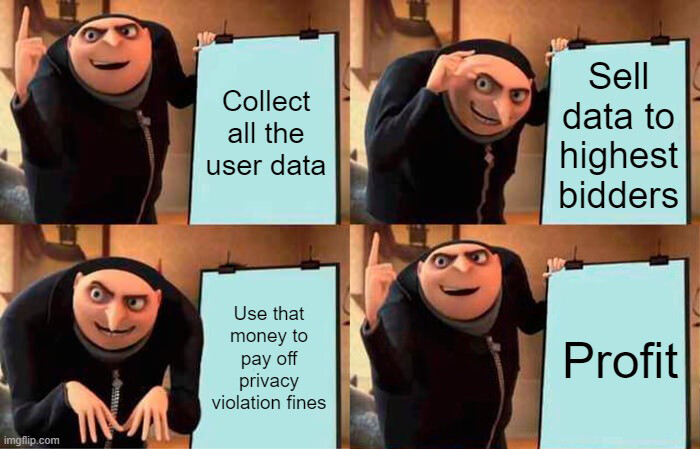

Because if they can’t ban pornography, they’ll try to restrict access to it as much as they can, apparently.

Yeah, that sounds promising…

No no, see, it’s going to make all the difference, for them:

Well, to be fair, lolicon IS art. But will it be regulated? What are the chances of that happening? Probably high, right?
