That is strange… Part of me is deathly afraid of how this debate will turn out.

Obviously, CSAM scanning at the local level is problematic because it opens a Pandora’s box of sorts. It sets a precedent that may enable further encroachment into people’s private lives and the erosion of consumer privacy protections, all while causing unspoken yet palpable damage to the longstanding privacy consensus.

I see value in using CSAM detection methods, like PhotoDNA, to detect, remove, and report known CSAM. That has real value in helping to end the sexual exploitation of children, as it helps eliminate harmful material whose existence only drives up demand for further sexual exploitation and abuse of children.

Yet… I’m not sure how, or why, Apple would feel the need to implement something like this at the local level if it can be done server-side. The fact that the scanning would only apply to media synced with iCloud casts an interesting light on everything, as if Apple knows the kind of fire it’s playing with here.

Personal computers, smartphones, etc. are, in many ways, extensions of the self. Their contents, and how they’re used or interacted with, always bear a significant relationship to their users, in much the same way as one’s person, possessions, papers, and effects do.

Privacy matters. Without it, people can’t truly be themselves, because they believe that their every move, every keystroke, and everything their device renders, sees, or hears can be used against them.

People need privacy and freedom to think logically and clearly, to be true to themselves, and to understand themselves. This kind of self-actualization through interaction with information and communications technology is, in part, why America has been able to achieve so much. If that sense of personal privacy and security is threatened, undermined, or compromised in any way, we may see the death of rational thinking.

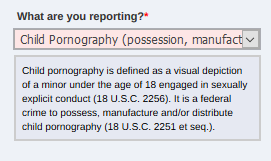

Of course, nobody should be allowed to participate in a market that is contingent on the sexual abuse and exploitation of children. The commodification of child sexual abuse, the trauma, the suffering: all of it bears an intrinsic link to the material in question.

Legal prohibition of even the mere possession of such materials is justified. The right to privacy does not extend to participation in this market, even at that level.

If a person is caught with CSAM stored locally rather than in the cloud, it doesn’t really matter. They deserve whatever punishment they get. They had to access the Internet in order to obtain it, or they had to acquire it from someone who obtained it through similar means. And either way, it’s sexual abuse involving real children.

At the end of the day, one core question looms over all of this.

Is sacrificing or risking respect for user privacy like this a valid trade-off in the war against CSAM?

In the end, I don’t think so. I think the benefits of preserving and maintaining user privacy outweigh the benefits of leveraging local drive access as a means to strengthen prohibitions on CSAM. I think it’s quite dangerous to that goal, if anything.

I don’t think it’s a stretch to say that, in the long run, such a trade-off would actually only cause more harm to children and victims of abuse.

Allow me to explain.

If your phone or computer is monitoring the contents of your local storage, scanning images and video for CSAM, and running your messages, documents, notes, etc. through an AI designed to detect pedophilic or CSAM-related content, then it’s likely that the contents of your web browser (history, bookmarks, cache) would be under identical scrutiny.

People are already afraid to google information related to child pornography or pedophilia because they fear being put on some kind of list, or that someone, somewhere, will link those searches to them.

People who are having obsessive, unwanted pedophilic thoughts, or who are succumbing to sexual fantasies and feeling guilty or stressed about it, may be in desperate need of information or support from charities or support groups. Yet they would likely feel reluctant to search Google for those resources, and may instead take matters into their own hands, often inventing maladaptive coping strategies built around sexual repression or denial, which ultimately cause further harm or even increase the risk of their committing an offense against a minor.

Those who are interested in the scientific literature on how adult-child sex may negatively affect a child’s psychology may feel reluctant to google it because of the subject alone, so they may simply settle for what society tells them, which is often hearsay, conjecture, and aggressive stigma or other conformist rhetoric, rather than an objective, empirically sound account based on facts and data.

The former is, of course, the wrong way to go about learning about child sexual exploitation. A sizeable portion of the population simply assumes that CSAM is illegal because it’s “icky” or “offensive”, or because they believe it “may cause pedos to go out and abuse children”, rather than out of genuine concern for the child victims involved in producing that material and the need to eradicate the market for their abuse. This indicates a general lack of knowledge and understanding about pedophilia, pornography, their effects on one another, and how they relate to CSA. It may also reveal where people’s true interests or concerns lie: not with the safety and wellbeing of children, but with their personal feelings or the need to blindly conform to a popular or cultural standard.

Both motivations are dangerous because, should specific examples or categories of CSAM fail to provoke offense, or should those standards and viewpoints themselves bend and warp to accommodate and welcome such material, then at that point child sexual exploitation would officially become systemic. Such a reality would, of course, be antithetical to the interest in protecting minors from abuse and punishing those who abuse them.

The rights of children to be free from sexual abuse and exploitation are not contingent on flimsy norms, personal feelings, or assumed popular ideals; rather, they are absolute. This absolute is justified by the vast amount of empirical data, which consistently shows a causal relationship between adult-child sexual activity and stress, trauma, and psychological and developmental conditions.

A person might argue that such things would never be seen as “inoffensive”, but that’s not really true. The main reason “child pornography” is a category of speech separate from “obscenity” is that the obscenity doctrine and its laws were not designed to address sexual exploitation. Rather, the doctrine was designed solely to appease prudes and quell certain types of sexually oriented expression, and SCOTUS felt that CP warranted its own category.

This is primarily because, under the obscenity standard alone, a child pornographer could evade prosecution if a judge or jury believed the material wasn’t obscene. Children and young teens could be filmed or photographed in lascivious poses or performing erotic or sexual acts, such as genital-on-genital penetration or even oral sex, with adults or with actors of a similar age, so long as the judge or jury either didn’t find the material “patently offensive” under state law or believed it to have “serious…value”.

The justices knew that the arbitrary, vague, and superficial standard the Court had carved out in Roth/Miller couldn’t be relied on to actually prevent or punish harm.

All of this is relevant to the need to preserve user privacy because people need to know that they won’t be punished for being curious about controversial issues or subject matter. They need to feel comfortable and confident, but most of all, safe.

Sure, one could argue that all of these truths and facts could be upheld alongside client-side CSAM detection regimes, yet such an argument still overlooks the implicit value of true privacy. If it were possible to implement client-side CSAM detection without affecting user privacy, I would be all in favor of it.

@terminus I hope you guys know what you’re doing with this.

This is a very delicate matter, and I wouldn’t want to see Prostasia’s stance against Apple’s client-side CSAM detection used against us, whether to discredit the charity and its community of activists and scientists, or our arguments and empirical research.