You’re not really in a position to demand anything. If you were to ask respectfully, I’d be happy to explain:

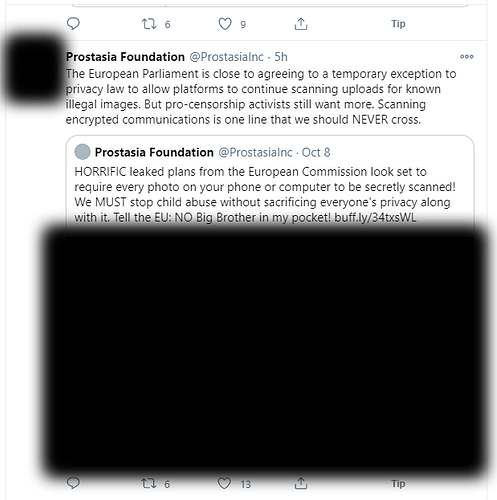

The compromise that you are suggesting is similar to what the European Commission is already considering: they propose that images be encoded on your device and sent to a server for scanning, and that an image be reported only if it matches a known image of CSAM.

The problem with this is that it can’t, technically, be limited to just CSAM. Enabling the behavior described above would allow anything to be scanned, which would defeat the purpose of end-to-end encryption. For example, leaked documents could be added to the CSAM database, enabling whistleblowers like Edward Snowden to be caught.
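To make that concrete, here is a rough sketch of list-based matching. It is not how any real scanner is implemented: `Scanner`, `digest`, and the placeholder byte strings are all hypothetical names of mine, and I'm using SHA-256 as a stand-in, whereas real deployments typically use perceptual hashes that tolerate resizing and re-encoding. The point it illustrates is only that the matching step sees opaque hashes and has no way to know what kind of content a list entry represents.

```python
import hashlib


def digest(data: bytes) -> str:
    """Stand-in for an image hash (assumption: real systems use perceptual hashes)."""
    return hashlib.sha256(data).hexdigest()


class Scanner:
    """Hypothetical matcher: flags content whose hash appears on an opaque list."""

    def __init__(self, blocklist):
        # The scanner only ever holds hashes, never a description of what they are.
        self.blocklist = set(blocklist)

    def is_flagged(self, data: bytes) -> bool:
        return digest(data) in self.blocklist


# Placeholder byte strings standing in for real files.
known_image = b"bytes of an image already on the list"
leaked_document = b"bytes of a leaked government document"

scanner = Scanner({digest(known_image)})
before = scanner.is_flagged(leaked_document)

# Whoever maintains the list can add any hash; the matching code does not change.
scanner.blocklist.add(digest(leaked_document))
after = scanner.is_flagged(leaked_document)

print(before, after)
```

Nothing in `is_flagged` can tell the two entries apart, so any limit on what gets scanned for has to come from how the list is governed, not from the scanning technology.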

Now, if the CSAM databases were maintained in a completely transparent and accountable process, then this might be less of a concern. But they’re not, and therein lies the problem, not just for us but also for the European Parliament. Ultimately, Europe is planning to bring in a much more strictly regulated regime for maintaining the CSAM databases. This may change some things, but we’ll have to see.

Anyway, that is years away. In the meantime, this dilemma can be addressed immediately by moving some of our resources away from surveillance and censorship technologies and towards prevention interventions. There is much more that we can do right now to prevent the perpetration of CSAM offenses that doesn’t require any surveillance or censorship.

As for grooming, we just published an article about this.

And once again, enough with this “explain yourself” bullshit. Please address others on this forum respectfully and assume good faith.